Predictive policing, a practice carried out by tools like CrimeScan and PredPol, uses algorithmic systems to predict geographic areas that are most likely to have patterns of criminal behaviors based on data of previous reports of crimes, frequency of 911 calls, and long-term rates of serious violent crime. Given the scholarship regarding the unequal policing of minority neighborhoods, the crime rate of a neighborhood does not just reflect frequency of actual crime, but also the decisions made by law enforcement. When government and police entities suspect more crime in low-income, non-white neighborhoods and patrol these areas at a higher rate, they actively inflate crime statistics. This creates a feedback loop, further identifying minority neighborhoods as “bad” areas that should be policed heavily. Predictive policing is one of many examples of algorithmic decision making systems that perpetuate human biases and seriously disadvantage minorities and low income people. Governments across the U.S. need to stop the rapid implementation of these systems and demand accountability so that discriminatory bias is not further built into society.

Artificial intelligence is not the first example of data-driven policy that has resulted in racial discrimination. Redlining, “the practice of arbitrarily denying or limiting financial services to specific neighborhoods, generally because its residents are people of color or are poor,” used data and geographic analysis as a means of keeping “undesirable” people–coded language for non-white and poor–from living in white neighborhoods. This practice, outlawed in the 1970s, allow responsible entities to privilege high-income, white community members without explicitly targeting racialized groups, using geographic location as a proxy.

The function of automated decision-making systems has come to reflect the legacy of redlining. In recent years, these tools have been rapidly integrated into a number of social institutions that make crucial choices about people’s lives–choices like who gets a loan, which families are investigated for child abuse, and who is granted parole. Many of these algorithms are specifically developed to omit the implicit biases of humans from policing, but the reality is that they often do the opposite, further internalizing and perpetuating such biases. Algorithmic bias exists as a product of human bias. When humans build an algorithmic system, that system “actually masks an underlying series of subjective judgments on the part of the system designers about what data to use, include or exclude, how to weigh the data, and what information to emphasize or deemphasize.” By embedding human biases into learning systems, bias is strengthened and concretized in a way that has harmful effects on real people.

In a time when artificial intelligence is considered the “next big thing”, the lack of existing regulation and extreme compilation of economic capital and power of big tech companies is a matter of concern. Given that these decision-making systems are mostly sourced from private companies that fight to protect their proprietary code, transparency is hard to come by. Another barrier to accountability is that “machine-learning algorithms can constantly evolve, meaning that outputs can change from one moment to the next without any explanation or ability to reverse engineer the decision process.” Automated decision making systems are black-box systems: the processes happening inside of them are difficult–and sometimes impossible–to fully understand. When artificial intelligence systems are taken as objective, benign, and calculated, their outputs are as well–creating confirmation bias. Skewed outcomes as a result of internalized bias are considered fact; and in turn, can be referenced to validate further skewed results. Biased algorithms become a self-perpetuating system.

Social justice advocates like the Brennan Center for Justice and the ACLU have begun to call out governments for this lack of accountability. In December of 2017, the New York City Council passed an algorithmic accountability bill, convening a task force that works to monitor the use of automated systems in the public sector. The push for this bill was a response to the use of the controversial Forensic Statistical Tool to analyze DNA samples, the validity and fairness of which was called into question by a ProPublica report. The report details the 30% margin of error of the FST tool and the fact that it is used in legal battles to sentence criminals, having a potentially disastrous effect on an innocent person’s life.

A previous version of the algorithmic accountability bill forced city agencies to make public the source code of all algorithmic systems of this type but received concerns that it might threaten New York residents’ data privacy and the security advantage of the government, as well as resistance from tech companies to release proprietary code. Ellen Goodman, professor of law at Rutgers University, argues that “New York City has the power [to push back] and insist on preserving the public interests.” This doesn’t necessarily mean mandating public source code, however, but perhaps building contracts with tech companies that require transparency from the start. Julia Powles, a New York University School of Law researcher, was disappointed to see the scale-back of the New York City Council bill, pointing to stricter policies in Europe that require some level of corporate accountability.

American society is plagued by technochauvinism, the idea that the technological solution is always the best one. This is not to say that big data and technological advances don’t offer significant innovative solutions to societal problems, but rather that this is not the case every time. The suggestion is not to halt data and algorithm use, but to interrogate the validity, fairness, and value of algorithmic models and advocate for a conscious and purposeful implementation of them.

As we look forward, we must consider the perpetual potential of discriminatory algorithms. Data-driven policy is inherently backwards-looking. Algorithms can only learn from existing datasets, which are grounded in past experiences and past trends. As it was with redlining, data-driven decisions can be a means of maintaining the status quo. The rapid implementation of automated decision making systems could be a mechanism to program historical discrimination into the future.

The use of algorithmic systems to solve social problems is a political choice, and one that warrants significant reflection. It is crucial that governments stand up and demand accountability from tech companies. Sitting a task force is a laudable first step, but action against discriminatory practices under the guise of objective data science must be more expansive. There are researchers working to build explainable algorithms and accountability checks and balances–not every system must be a black box. A responsible political choice would be to slow the implementation of unevaluable algorithms and privilege the development of responsible automated systems. If tangible steps aren’t taken to dismantle these harmful systems, automated bias may further concretize the implicit human bias that haunt our society in a manner that is no longer checked by human discretion.

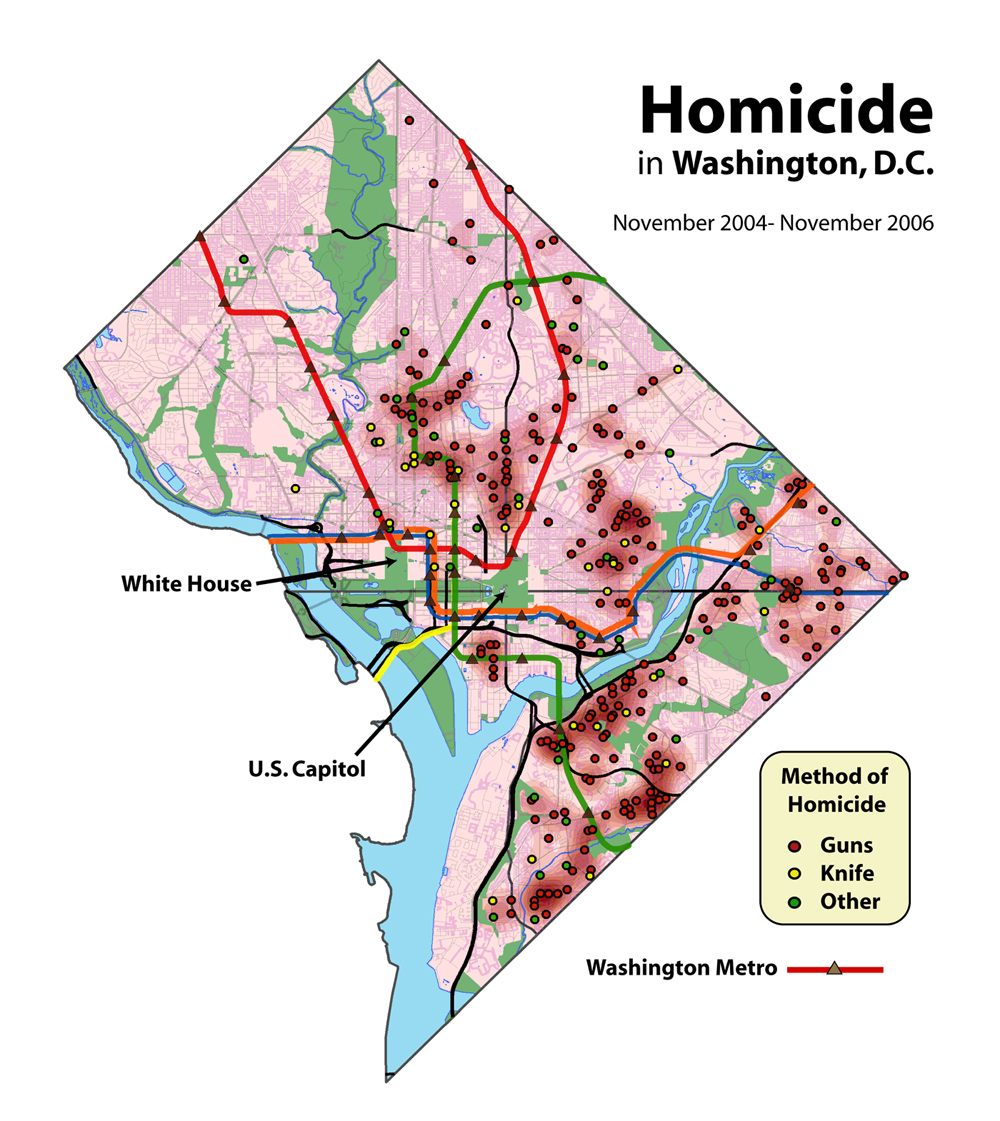

Photo: “Homicide Rates in Washington D.C.“